FC Manual: An Overview

Fibre Channel (FC) manuals are crucial for understanding storage networks. Recent developments, like Marvell’s focus on FC-NVMe, highlight the technology’s evolution. These guides detail implementation, management, and advanced concepts.

What is Fibre Channel?

Fibre Channel (FC) is a dedicated, high-speed network technology primarily used for connecting storage devices. Unlike Ethernet, which evolved from general-purpose networking, Fibre Channel was specifically designed for storage, prioritizing reliability, low latency, and high bandwidth. It operates as a transport layer, enabling communication between servers and storage subsystems like disk arrays and tape libraries.

Historically, FC utilized specialized cabling and connectors, but advancements have led to convergence technologies like Fibre Channel over Ethernet (FCoE). However, traditional FC remains prevalent in enterprise environments demanding consistent performance. The core principle revolves around block-level data transfer, making it ideal for applications requiring rapid and dependable access to storage resources.

Recent industry trends, such as the rise of NVMe-oF, are significantly impacting Fibre Channel. Companies like Marvell are actively investing in enhancing FC’s capabilities to support NVMe protocols, bridging the gap between traditional storage networking and the speed of flash memory. Understanding FC’s foundational principles is essential for navigating these evolving storage landscapes.

History of Fibre Channel Technology

Fibre Channel’s origins trace back to 1988, when work began within the ANSI X3T11 committee (now INCITS T11) on a high-speed serial interface intended to overcome the speed and distance limits of parallel storage interfaces like SCSI. The first core standard, FC-PH (covering the physical, encoding, and framing layers FC-0 through FC-2), was approved in 1994, laying the groundwork for widespread adoption.

Throughout the 1990s, FC gained traction in enterprise storage environments, driven by its superior performance compared to alternatives. Key advancements included hot-pluggable Gigabit Interface Converter (GBIC) transceivers, later succeeded by the smaller Small Form-factor Pluggable (SFP) modules, which made port optics flexible and replaceable. The development of Fibre Channel switches further enhanced network capabilities, allowing for complex topologies and improved bandwidth utilization.

The 21st century witnessed continued evolution, with successive Fibre Channel generations at 8Gbps, 16Gbps, 32Gbps, and now 64Gbps. More recently, the industry has focused on integrating FC with newer technologies like NVMe-oF, as exemplified by Marvell’s investments. This adaptation ensures Fibre Channel remains relevant in the face of evolving storage demands, bridging legacy infrastructure with cutting-edge performance.

FC-NVMe: The Next Generation

FC-NVMe represents a significant leap forward in storage technology, combining the reliability of Fibre Channel with the speed of Non-Volatile Memory Express (NVMe). NVMe was designed for flash, supporting up to 65,535 I/O queues of up to 64K commands each, whereas SCSI-based protocols were designed around spinning disks and can become a bottleneck for NVMe devices. NVMe over Fabrics (NVMe-oF), and specifically its Fibre Channel transport, addresses this by providing a low-latency, high-bandwidth pathway for NVMe devices.

Marvell’s increased focus on FC-NVMe underscores its growing importance in the data center. This convergence allows organizations to leverage existing Fibre Channel infrastructure while benefiting from the performance advantages of NVMe SSDs. Key benefits include reduced latency, increased IOPS, and improved overall application responsiveness.
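
The link between lower latency and higher IOPS follows from Little's law: with a fixed number of I/Os in flight, sustained IOPS equals outstanding I/Os divided by mean latency. A minimal Python sketch with hypothetical numbers (not measurements of any product):

```python
def iops_from_littles_law(outstanding_ios: float, latency_s: float) -> float:
    """Steady-state IOPS = outstanding I/Os / mean per-I/O latency (Little's law)."""
    if latency_s <= 0:
        raise ValueError("latency must be positive")
    return outstanding_ios / latency_s

# Hypothetical queue depth of 32 I/Os in flight.
print(round(iops_from_littles_law(32, 100e-6)))  # 320000 at 100 microseconds per I/O
print(round(iops_from_littles_law(32, 500e-6)))  # 64000 at 500 microseconds per I/O
```

At a constant queue depth, cutting per-I/O latency raises achievable IOPS proportionally, which is why lower protocol overhead shows up directly as higher IOPS.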

Implementing FC-NVMe requires compatible HBAs, switches, and NVMe-oF targets. Proper configuration and zoning are crucial for optimal performance and security. As data-intensive workloads continue to grow, FC-NVMe is poised to become a cornerstone of modern storage architectures, offering a compelling solution for demanding enterprise applications.

FC Manual: Core Components

Fibre Channel networks rely on HBAs, switches, and specialized cables. These components work together to create a robust and high-performance storage infrastructure, essential for modern data centers.

Fibre Channel Host Bus Adapters (HBAs)

Fibre Channel Host Bus Adapters (HBAs) are fundamental components enabling servers to connect to a Fibre Channel storage network. Essentially, they act as a bridge, translating data between the server’s operating system and the Fibre Channel fabric. HBAs are crucial for accessing storage area networks (SANs), providing high-speed, low-latency data transfer capabilities.

Modern HBAs support various speeds, including 8Gbps, 16Gbps, 32Gbps, and even 64Gbps, allowing for scalable performance as storage demands increase. They typically connect to servers via PCIe slots, offering significant bandwidth. Key features include hardware-based offloading of protocol processing, reducing CPU utilization on the server. This offloading enhances overall system performance and efficiency.

When selecting an HBA, factors like port count, supported speeds, and compatibility with the server and switch are vital. Dual-port HBAs provide redundancy, ensuring continued connectivity even if one port fails. Furthermore, advanced HBAs often include features like boot from SAN capabilities, allowing servers to boot directly from storage devices on the SAN. Proper HBA configuration and driver updates are essential for optimal performance and stability within the Fibre Channel environment.
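
On Linux, the FC transport class exposes each HBA port under /sys/class/fc_host/, with attributes such as port_name (the WWPN), port_state, speed, and supported_speeds, which makes it easy to confirm that every port is online at the expected speed. A minimal sketch, assuming a Linux host; the function name is illustrative, and which attributes are populated varies by driver:

```python
from pathlib import Path

# Attributes published by the Linux FC transport class for each host port.
ATTRS = ("port_name", "node_name", "port_state", "speed", "supported_speeds")

def list_fc_hosts(root: str = "/sys/class/fc_host") -> dict[str, dict[str, str]]:
    """Return {host: {attribute: value}} for every FC host port found under root."""
    hosts: dict[str, dict[str, str]] = {}
    base = Path(root)
    if not base.is_dir():
        return hosts  # no FC HBAs present, or not a Linux system
    for host_dir in sorted(base.iterdir()):
        info: dict[str, str] = {}
        for attr in ATTRS:
            f = host_dir / attr
            if f.is_file():
                info[attr] = f.read_text().strip()
        hosts[host_dir.name] = info
    return hosts

if __name__ == "__main__":
    for host, info in list_fc_hosts().items():
        # A healthy port typically reports "Online" at its negotiated speed.
        print(host, info.get("port_name"), info.get("port_state"), info.get("speed"))
```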

Fibre Channel Switches

Fibre Channel switches are the central nervous system of a Storage Area Network (SAN), facilitating communication between servers and storage devices. These dedicated switches manage and direct data traffic, ensuring efficient and reliable data transfer. Unlike traditional network switches, Fibre Channel switches are specifically designed for the demanding requirements of storage networks, prioritizing low latency and high bandwidth.

Fibre Channel switches come in various form factors and port densities, ranging from entry-level switches for smaller environments to large, modular switches for enterprise-scale deployments. Key features include zoning, which logically isolates devices for security and performance, and fabric management capabilities, allowing administrators to monitor and configure the SAN.

Modern switches support features such as fabric extension (for example FCIP, which tunnels Fibre Channel over IP links to reach storage at remote sites) and vendor diagnostic suites such as Brocade Fabric Vision, which provides advanced monitoring and diagnostic tools. Redundancy is crucial; switches often include redundant power supplies and fans, and support for multiple paths to ensure high availability. Selecting the right switch involves considering port speed, scalability, management features, and compatibility with existing infrastructure. Proper switch configuration and firmware updates are vital for optimal SAN performance and stability.

Fibre Channel Cables and Connectors

Fibre Channel cables and connectors are critical components for establishing physical connections within a SAN. The choice of cabling significantly impacts performance, distance, and cost. Historically, copper cabling was common for short distances, but optical fiber has become the dominant medium due to its superior bandwidth and reach.

Several types of fiber optic cables are used: multimode fiber (MMF) for shorter runs, whose reach shrinks as speeds rise (from several hundred meters at older, slower speeds to roughly 100 meters or less on OM3/OM4 fiber at 16Gbps and above), and single-mode fiber (SMF) for longer distances (typically 10 kilometers, with some optics reaching farther). Connectors, such as LC, SC, and MPO, are used to terminate the cables and connect them to HBAs and switches. LC connectors are now the most prevalent due to their small form factor and high density.

Cable quality is paramount; low-loss cables minimize signal attenuation, ensuring reliable data transmission. Proper cable management is also essential to prevent damage and maintain signal integrity. Emerging standards support higher data rates, requiring cables specifically designed to handle increased bandwidth. When selecting cables, consider distance requirements, data rate needs, and compatibility with existing hardware. Regular inspection and replacement of damaged cables are vital for maintaining SAN reliability.
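
Cable and connector losses add up, and a link works only if the total stays inside the optics' power budget. A minimal loss-budget sketch; the per-kilometer, per-connector, and per-splice figures are assumed TIA-568-style worst-case values, and the 2.0 dB budget is hypothetical, so substitute the figures from your transceiver datasheets and cabling specification:

```python
# Assumed worst-case attenuation values (check your cabling spec and optics datasheets).
FIBER_DB_PER_KM = {"mmf_850nm": 3.5, "smf_1310nm": 1.0}
CONNECTOR_PAIR_DB = 0.75
SPLICE_DB = 0.3

def link_loss_db(length_km: float, fiber: str, connector_pairs: int, splices: int = 0) -> float:
    """Total passive loss: fiber attenuation plus connector-pair and splice losses."""
    return (length_km * FIBER_DB_PER_KM[fiber]
            + connector_pairs * CONNECTOR_PAIR_DB
            + splices * SPLICE_DB)

def within_budget(loss_db: float, budget_db: float, margin_db: float = 1.0) -> bool:
    """True if the loss plus a safety margin fits inside the optic's power budget."""
    return loss_db + margin_db <= budget_db

# Hypothetical 100 m multimode run through two patch panels (two connector pairs).
loss = link_loss_db(0.1, "mmf_850nm", connector_pairs=2)
print(f"{loss:.2f} dB")                    # 1.85 dB
print(within_budget(loss, budget_db=2.0))  # False: 1.85 dB + 1.0 dB margin exceeds 2.0 dB
```

The example shows why patch panels matter at high speeds: the two connector pairs contribute far more loss than the 100 meters of fiber.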

FC Manual: Protocols and Standards

Fibre Channel relies on protocols like FCP and SCSI for data transfer. NVMe over Fibre Channel (FC-NVMe) is emerging as a faster alternative. Understanding these standards is key to efficient SAN operation and management.

Fibre Channel Protocol (FCP)

Fibre Channel Protocol (FCP) is the FC-4 layer mapping that carries SCSI over Fibre Channel networks. It encapsulates SCSI commands, data, and status within Fibre Channel frames, enabling block-level storage access. In effect, FCP allows servers to communicate with storage devices as if they were directly attached, despite being connected over a network.

Historically, FCP was the dominant protocol for storage networking, providing reliable and efficient data transmission. It runs on top of the lower Fibre Channel layers (FC-0 through FC-2), which handle the physical link, encoding, and framing, and inherits their low latency and high bandwidth. The protocol defines how SCSI commands are formatted, addressed, and transported across the Fibre Channel fabric.

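FCP uses a request/response model built from information units (IUs). The initiator (typically a server) sends an FCP_CMND IU containing the SCSI command to the target (a storage array). Data then moves in FCP_DATA IUs; for writes, the target typically first sends FCP_XFER_RDY to signal that it is ready to receive. The target completes the exchange by returning SCSI status in an FCP_RSP IU. Because every Fibre Channel frame also carries a CRC, corrupted frames are detected rather than silently accepted.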
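
The exchange described above can be modeled as an ordered list of information units. A simplified sketch, not an FCP implementation: it assumes first-burst writes are disabled (so writes wait for FCP_XFER_RDY) and assumes 2048 bytes of data per frame, below the 2112-byte FC payload maximum:

```python
import math

FRAME_DATA_BYTES = 2048  # assumed data bytes per frame; FC allows up to 2112

def fcp_exchange(op: str, transfer_bytes: int) -> list[tuple[str, str]]:
    """Return (sender, IU) pairs for a simple SCSI READ or WRITE over FCP."""
    seq = [("initiator", "FCP_CMND")]
    if op == "WRITE" and transfer_bytes:
        seq.append(("target", "FCP_XFER_RDY"))  # target is ready to accept data
        seq.append(("initiator", "FCP_DATA"))
    elif op == "READ" and transfer_bytes:
        seq.append(("target", "FCP_DATA"))      # target returns the requested data
    elif op not in ("READ", "WRITE"):
        raise ValueError(f"unsupported operation: {op}")
    seq.append(("target", "FCP_RSP"))           # SCSI status closes the exchange
    return seq

def data_frames(transfer_bytes: int) -> int:
    """Number of frames needed to carry the data phase (one FCP_DATA IU may span many)."""
    return math.ceil(transfer_bytes / FRAME_DATA_BYTES)

for sender, iu in fcp_exchange("WRITE", 8192):
    print(f"{sender:>9} -> {iu}")
print(data_frames(8192), "data frames")  # 4 data frames
```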

While NVMe-oF is gaining traction, FCP remains widely deployed, particularly in environments with existing Fibre Channel infrastructure. Its maturity and proven reliability continue to make it a viable option for many storage applications. Understanding FCP is fundamental to managing and troubleshooting Fibre Channel storage networks.

Small Computer System Interface (SCSI)

Small Computer System Interface (SCSI) is a set of standards for physically connecting and transferring data between computers and peripheral devices. Historically, parallel SCSI was the primary interface for hard drives, tape drives, and other storage devices before being largely superseded by SATA and SAS (Serial Attached SCSI, which keeps the SCSI command set). However, SCSI remains critically important within the context of Fibre Channel (FC) networking.

Fibre Channel Protocol (FCP), as discussed, encapsulates SCSI commands for transmission over FC fabrics. This means that even though data travels over Fibre Channel, the underlying communication is still based on SCSI commands. Servers issue SCSI commands, which are then transported by FCP to the storage devices.
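
To make "SCSI commands inside FCP" concrete: the FCP command information unit (FCP_CMND) carries a SCSI command descriptor block (CDB). A minimal sketch building a READ(10) CDB (opcode 0x28, 32-bit logical block address, 16-bit transfer length in blocks); the function name is illustrative, and the code only constructs the bytes without sending anything:

```python
import struct

READ_10 = 0x28  # SCSI READ(10) operation code

def read10_cdb(lba: int, blocks: int) -> bytes:
    """Build a 10-byte READ(10) CDB: opcode, flags, LBA, group, length, control."""
    if not 0 <= lba <= 0xFFFFFFFF:
        raise ValueError("READ(10) addresses 32-bit LBAs; larger devices need READ(16)")
    if not 0 <= blocks <= 0xFFFF:
        raise ValueError("READ(10) transfer length is 16 bits")
    # Big-endian: opcode(1) flags(1) LBA(4) group(1) transfer length(2) control(1).
    return struct.pack(">BBIBHB", READ_10, 0, lba, 0, blocks, 0)

cdb = read10_cdb(lba=2048, blocks=8)
print(cdb.hex())  # 28000000080000000800
```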

Different SCSI standards have evolved over time, including SCSI-1, SCSI-2, and SCSI-3, each offering improvements in speed and functionality. Ultra SCSI, Wide Ultra SCSI, and Ultra3 SCSI are examples of faster implementations. Understanding these variations can be helpful when dealing with legacy systems.

While direct SCSI connections are less common today, the SCSI command set remains fundamental to storage management. Modern storage arrays often emulate SCSI compatibility to ensure interoperability with older applications and operating systems. Therefore, a grasp of SCSI principles is essential for anyone working with Fibre Channel storage solutions.

NVMe over Fibre Channel (FC-NVMe)

NVMe over Fibre Channel (FC-NVMe), the Fibre Channel transport within the broader NVMe over Fabrics (NVMe-oF) family, represents a significant advancement in storage technology, bridging the gap between the high-performance Non-Volatile Memory Express (NVMe) protocol and the reliable Fibre Channel network. Traditional storage protocols like FCP can introduce latency, hindering NVMe’s full potential. FC-NVMe carries NVMe commands directly over a Fibre Channel fabric, without translating them to SCSI, minimizing overhead and maximizing throughput.

Marvell’s increased investment in FC-NVMe, as reported, underscores the growing demand for this technology. It allows organizations to leverage existing FC infrastructure while benefiting from the speed and efficiency of NVMe SSDs. This is particularly valuable for latency-sensitive applications like databases, virtualization, and high-performance computing.

Several NVMe-oF transports exist: FC-NVMe, RDMA-based transports such as RoCE (RDMA over Converged Ethernet), InfiniBand, and iWARP, and NVMe/TCP. The RDMA transports move data directly between server and storage memory with minimal CPU involvement. FC-NVMe does not use RDMA; it maps NVMe commands and data onto Fibre Channel frames and relies on HBA offload for comparable efficiency. In both cases the result is lower latency and higher IOPS (Input/Output Operations Per Second).

Implementing NVMe-oF requires compatible HBAs, switches, and storage arrays. Proper configuration and zoning are crucial for optimal performance and security. As NVMe technology continues to evolve, NVMe-oF will play an increasingly important role in modern data centers.
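
On Linux hosts, FC-NVMe subsystems can be attached with nvme-cli's "nvme connect" command, where FC transport addresses take the form nn-0x<WWNN>:pn-0x<WWPN> for both the target (--traddr) and the local HBA port (--host-traddr). A minimal sketch that only builds the command line; the WWNs and subsystem NQN are hypothetical placeholders, and some distributions automate these connections through udev rules:

```python
import re
import shlex

def fc_traddr(wwnn: str, wwpn: str) -> str:
    """Format a WWNN/WWPN pair as an NVMe/FC transport address: nn-0x...:pn-0x..."""
    def norm(wwn: str) -> str:
        digits = re.sub(r"[^0-9a-f]", "", wwn.lower().removeprefix("0x"))
        if len(digits) != 16:
            raise ValueError(f"expected a 64-bit WWN, got {wwn!r}")
        return "0x" + digits
    return f"nn-{norm(wwnn)}:pn-{norm(wwpn)}"

def nvme_connect_argv(tgt_nn: str, tgt_pn: str, host_nn: str, host_pn: str, nqn: str) -> list[str]:
    """Build (but do not run) an nvme-cli connect command for the FC transport."""
    return ["nvme", "connect",
            "--transport", "fc",
            "--traddr", fc_traddr(tgt_nn, tgt_pn),
            "--host-traddr", fc_traddr(host_nn, host_pn),
            "--nqn", nqn]

# Hypothetical target port, host HBA port, and subsystem NQN.
argv = nvme_connect_argv("20:00:00:25:b5:aa:00:01", "20:01:00:25:b5:aa:00:01",
                         "20:00:00:90:fa:12:34:56", "10:00:00:90:fa:12:34:56",
                         "nqn.2024-01.com.example:array1")
print(shlex.join(argv))
```

Building the argument list separately from execution makes it easy to review or log before passing it to subprocess.run on a host with nvme-cli installed.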

FC Manual: Implementation and Management

Fibre Channel deployment requires careful planning. Zoning and LUN masking are vital for security and access control. Proper network configuration, alongside consistent performance monitoring, ensures optimal operation.

Zoning and LUN Masking

Zoning and LUN masking are fundamental security mechanisms within a Fibre Channel storage environment. They control access to storage resources, preventing unauthorized access and enhancing data protection. Zoning operates at the fabric level, dividing the Fibre Channel network into zones: groups of ports or WWPNs that are allowed to communicate with one another. Devices that share no zone cannot see each other, ensuring that only authorized hosts can access specific storage targets.

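There are two primary enforcement types. Hard zoning is enforced by the switch hardware, which filters frames so that devices outside a zone cannot communicate even if they know each other's addresses. Soft zoning is enforced only through the fabric name server, which hides devices outside a zone from discovery queries but does not block frames sent directly to a known address. Proper zoning design is crucial for performance and stability: keeping zones small (a common practice is one initiator per zone) limits the scope of fabric state-change notifications (RSCNs) and avoids unintended interactions between hosts.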
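
The membership rule that zoning enforces can be modeled with plain sets. A conceptual sketch, not a switch API: the zone names and WWPNs are hypothetical, and hard versus soft zoning differ in how a switch enforces this rule, not in the rule itself:

```python
# Hypothetical WWPNs; real zones live in the switch's active zoning configuration.
HOST1 = "10:00:00:90:fa:00:00:01"
HOST2 = "10:00:00:90:fa:00:00:02"
ARRAY = "50:06:01:60:00:00:00:0a"

# Single-initiator zoning: each zone pairs one host port with the storage port it needs.
ZONES = {
    "z_host1_array": {HOST1, ARRAY},
    "z_host2_array": {HOST2, ARRAY},
}

def can_communicate(zones: dict[str, set[str]], a: str, b: str) -> bool:
    """Two ports may communicate only if at least one zone contains both of them."""
    return any(a in members and b in members for members in zones.values())

print(can_communicate(ZONES, HOST1, ARRAY))  # True: both are in z_host1_array
print(can_communicate(ZONES, HOST1, HOST2))  # False: no zone contains both hosts
```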

LUN masking complements zoning by controlling access at the storage array level. It defines which hosts can see and access specific Logical Unit Numbers (LUNs), representing storage volumes. While zoning restricts network-level connectivity, LUN masking governs access to the data itself. This layered approach provides robust security.
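
The layered approach means a host reaches a LUN only when both checks pass. A conceptual sketch with hypothetical WWPNs, zones, and masking entries; real masking is configured on the array, often through host or initiator groups:

```python
# Hypothetical configuration: zoning lives on the switch, masking on the array.
HOST1 = "10:00:00:90:fa:00:00:01"
HOST2 = "10:00:00:90:fa:00:00:02"
ARRAY = "50:06:01:60:00:00:00:0a"

ZONES = {"z_host1_array": {HOST1, ARRAY}, "z_host2_array": {HOST2, ARRAY}}

# Array-side LUN masking: (target port, LUN) -> initiators allowed to see that LUN.
LUN_MASKS = {(ARRAY, 0): {HOST1}, (ARRAY, 1): {HOST2}}

def zoned(zones: dict[str, set[str]], a: str, b: str) -> bool:
    """Network layer: the two ports share at least one zone."""
    return any(a in m and b in m for m in zones.values())

def can_access_lun(zones, masks, initiator: str, target: str, lun: int) -> bool:
    """Access requires both layers: a shared zone and an array masking entry."""
    return zoned(zones, initiator, target) and initiator in masks.get((target, lun), set())

print(can_access_lun(ZONES, LUN_MASKS, HOST1, ARRAY, 0))  # True: zoned and unmasked
print(can_access_lun(ZONES, LUN_MASKS, HOST1, ARRAY, 1))  # False: LUN 1 is masked to HOST2
```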

Effective implementation requires meticulous planning and documentation. Incorrectly configured zoning or LUN masking can lead to connectivity issues or, conversely, security vulnerabilities. Regular audits and adjustments are essential to maintain a secure and efficient Fibre Channel infrastructure, especially as storage requirements evolve.

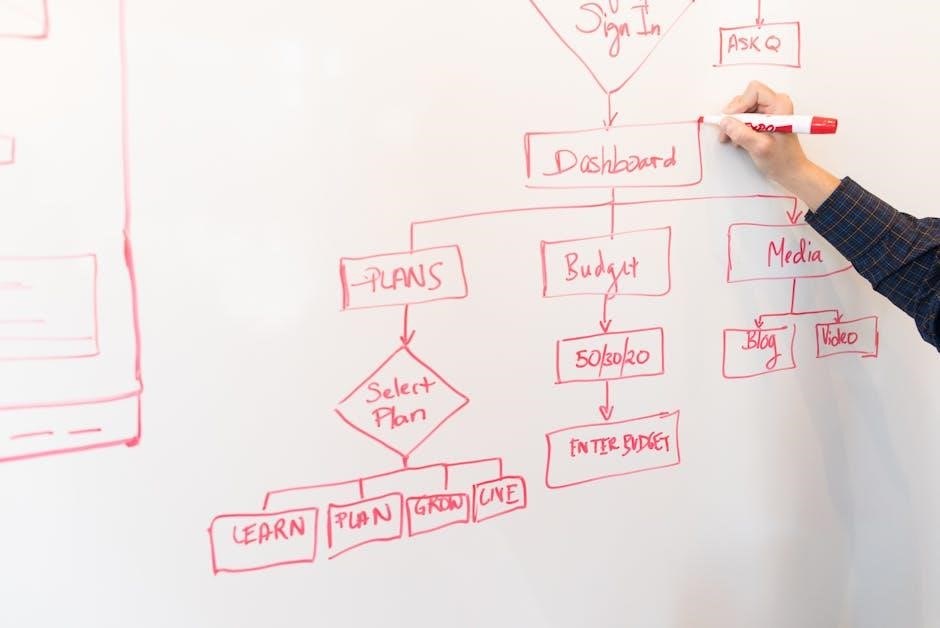

FC Network Configuration

Fibre Channel (FC) network configuration is a complex process demanding careful planning and execution. It begins with a thorough understanding of the storage requirements, including bandwidth, latency, and scalability. The physical topology (point-to-point, arbitrated loop, or switched fabric) significantly impacts performance and manageability. Switched fabrics are now the dominant architecture due to their superior scalability and flexibility.

Key configuration elements include World Wide Names (WWNs), 64-bit identifiers assigned to each node (WWNN) and each port (WWPN), and zoning, which logically segments the network for security and performance. Proper zoning prevents unauthorized access and limits the spread of fabric state-change notifications. Configuring virtual fabrics (such as Brocade Virtual Fabrics or Cisco VSANs) allows for further segmentation and isolation.
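
WWNs are printed in several formats (colon-separated, hyphenated, 0x-prefixed) depending on the tool, which complicates record keeping and comparisons. A minimal normalization sketch; the first hex digit is the Network Address Authority (NAA) field, with 1, 2, and 5 being common values in Fibre Channel. Function names are illustrative:

```python
import re

def normalize_wwn(text: str) -> str:
    """Return a 64-bit WWN in lowercase colon-separated form, e.g. 20:00:00:25:b5:aa:00:01."""
    digits = text.strip().lower()
    if digits.startswith("0x"):
        digits = digits[2:]
    digits = re.sub(r"[:\-\s]", "", digits)  # drop colons, hyphens, and spaces
    if not re.fullmatch(r"[0-9a-f]{16}", digits):
        raise ValueError(f"not a 64-bit WWN: {text!r}")
    return ":".join(digits[i:i + 2] for i in range(0, 16, 2))

def naa(wwn: str) -> int:
    """Network Address Authority: the first hex digit identifies the WWN format."""
    return int(normalize_wwn(wwn)[0], 16)

print(normalize_wwn("0x20000025B5AA0001"))   # 20:00:00:25:b5:aa:00:01
print(normalize_wwn("2000-0025-b5aa-0001"))  # 20:00:00:25:b5:aa:00:01
print(naa("50:06:01:60:3b:e0:12:34"))        # 5
```

Storing every WWN in one canonical form keeps zoning records, masking tables, and inventory documents directly comparable.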

Quality of Service (QoS) settings are crucial for prioritizing critical applications. Bandwidth allocation and latency control ensure consistent performance. Monitoring tools are essential for identifying bottlenecks and optimizing network performance. Regular firmware updates for switches and HBAs are vital for stability and security.

Documentation is paramount. Maintaining accurate records of WWNs, zoning configurations, and QoS settings simplifies troubleshooting and future expansions. A well-configured FC network is the foundation for a reliable and high-performing storage infrastructure.

Performance Monitoring and Troubleshooting

Fibre Channel (FC) performance monitoring is critical for maintaining optimal storage infrastructure health. Key metrics include throughput, latency, error rates, and utilization of HBAs and switches. Specialized monitoring tools provide real-time visibility into network performance, identifying potential bottlenecks before they impact applications.

Troubleshooting often begins with identifying the source of the problem (HBA, switch, cable, or storage array). Analyzing error logs and utilizing diagnostic commands can pinpoint the root cause. Common issues include cabling problems, misconfigured zoning, and firmware incompatibilities.

Latency is a primary concern; high latency can severely degrade application performance. Host-side I/O benchmarks such as fio can measure end-to-end storage latency as applications see it; iperf, by contrast, tests IP networks and cannot exercise a Fibre Channel fabric. Throughput limitations often indicate bandwidth constraints or congestion. Examining switch port statistics reveals potential overload situations.
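
Cabling and optics problems usually surface as rising link error counters. On Linux, the FC transport class exposes per-port counters under /sys/class/fc_host/hostN/statistics/. A minimal sketch, assuming a Linux host whose driver populates these counters; the function names are illustrative:

```python
from pathlib import Path

# Link-level counters from the Linux FC transport class. Steady growth in these
# usually points at the physical layer: cables, connectors, or transceivers.
LINK_COUNTERS = ("link_failure_count", "loss_of_sync_count", "loss_of_signal_count",
                 "invalid_crc_count", "invalid_tx_word_count")

def read_counters(host_dir) -> dict[str, int]:
    """Snapshot the link counters for one FC host port directory."""
    stats = Path(host_dir) / "statistics"
    values: dict[str, int] = {}
    for name in LINK_COUNTERS:
        f = stats / name
        if f.is_file():
            values[name] = int(f.read_text().strip(), 0)  # sysfs prints these as hex, e.g. 0x1a
    return values

def counter_deltas(before: dict[str, int], after: dict[str, int]) -> dict[str, int]:
    """Return only the counters that increased between two snapshots."""
    return {k: after[k] - before[k] for k in after if k in before and after[k] > before[k]}
```

Take one snapshot with read_counters("/sys/class/fc_host/host0") (the host number is a placeholder), wait through a representative workload, take a second, and pass both to counter_deltas. Growing CRC or loss-of-sync counts suggest checking the cable, connectors, and optics on that port first.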

Regularly scheduled performance tests and proactive monitoring are essential. Establishing baseline performance metrics allows for quick identification of deviations. Proper documentation of troubleshooting steps and resolutions builds a valuable knowledge base for future incidents. A systematic approach to monitoring and troubleshooting ensures a stable and efficient FC environment.
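
A baseline helps only if deviations are checked consistently. A minimal sketch comparing the 99th-percentile latency of current samples (for example, completion latencies exported from a tool like fio) against a baseline; the 25% tolerance is an assumed threshold, not a standard:

```python
import statistics

def p99(samples_us: list[float]) -> float:
    """99th-percentile latency; statistics.quantiles needs at least two samples."""
    return statistics.quantiles(samples_us, n=100)[98]

def deviates(baseline_us: list[float], current_us: list[float], tolerance: float = 0.25) -> bool:
    """Flag when current p99 latency exceeds the baseline p99 by more than the tolerance."""
    return p99(current_us) > p99(baseline_us) * (1 + tolerance)

# Hypothetical samples in microseconds.
baseline = [float(x) for x in range(100, 200)]
degraded = [x * 2 for x in baseline]
print(deviates(baseline, baseline))  # False
print(deviates(baseline, degraded))  # True
```

Tail percentiles are used rather than averages because intermittent problems such as congestion or a flapping link often show up in the tail long before they move the mean.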

FC Manual: Advanced Topics

Advanced Fibre Channel explores FCoE, bridging FC with Ethernet networks. NVMe-oF use cases are expanding, leveraging FC’s reliability. Future trends focus on increased speeds and efficiency.

Fibre Channel over Ethernet (FCoE)

Fibre Channel over Ethernet (FCoE) represents a significant advancement in storage networking, enabling Fibre Channel frames to be encapsulated and transported over Ethernet networks. This consolidation aims to reduce infrastructure costs by converging storage and data networks onto a single physical infrastructure. Traditionally, Fibre Channel required dedicated cabling and switches, creating a separate network from the standard IP-based Ethernet network.
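
A worked size calculation shows why FCoE needs larger-than-standard Ethernet frames. The field sizes below reflect the FCoE encapsulation as commonly described (802.1Q-tagged Ethernet header, 14-byte FCoE header, complete FC frame, 4-byte EOF trailer, Ethernet FCS); treat this as a sketch to check against the FC-BB-5 standard:

```python
# Field sizes in bytes for one FC frame carried in FCoE.
ETH_HEADER = 6 + 6 + 4 + 2  # destination MAC, source MAC, 802.1Q tag, EtherType (0x8906)
FCOE_HEADER = 14            # version, reserved bits, start-of-frame (SOF)
FC_HEADER = 24
FC_CRC = 4
FCOE_TRAILER = 4            # end-of-frame (EOF) plus reserved bytes
ETH_FCS = 4
MAX_FC_PAYLOAD = 2112

def fcoe_frame_size(fc_payload: int) -> int:
    """Total on-wire Ethernet frame size (excluding preamble) for a given FC payload."""
    if not 0 <= fc_payload <= MAX_FC_PAYLOAD:
        raise ValueError("FC payload is limited to 2112 bytes")
    return (ETH_HEADER + FCOE_HEADER + FC_HEADER + fc_payload
            + FC_CRC + FCOE_TRAILER + ETH_FCS)

print(fcoe_frame_size(MAX_FC_PAYLOAD))  # 2180
```

The 2180-byte maximum exceeds the standard 1518-byte Ethernet frame (1522 bytes with a VLAN tag), which is why FCoE links are typically configured with "baby jumbo" frames of about 2.5 KB.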

FCoE relies on lossless Ethernet, provided by Data Center Bridging (DCB), to carry Fibre Channel traffic reliably. DCB provides features like Priority Flow Control (PFC), which pauses traffic per priority class instead of dropping it, and Enhanced Transmission Selection (ETS), which guarantees bandwidth shares for storage traffic. This is crucial because Fibre Channel is inherently loss-sensitive: it has no fast built-in retransmission, so a dropped frame typically forces upper-layer error recovery, often only after a timeout.
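
ETS allocations are percentages of link bandwidth assigned to traffic classes. A minimal sketch with a hypothetical 25GbE split; the class names and percentages are illustrative, and under ETS these are minimum guarantees, so a class may borrow bandwidth that others leave unused:

```python
def ets_guarantees(link_gbps: float, shares_pct: dict[str, int]) -> dict[str, float]:
    """Minimum bandwidth per traffic class under ETS; percentages must total 100."""
    if sum(shares_pct.values()) != 100:
        raise ValueError("ETS bandwidth percentages must sum to 100")
    return {tc: link_gbps * pct / 100 for tc, pct in shares_pct.items()}

# Hypothetical split: storage (FCoE) 50%, general LAN 40%, management 10%.
print(ets_guarantees(25, {"fcoe": 50, "lan": 40, "mgmt": 10}))
# {'fcoe': 12.5, 'lan': 10.0, 'mgmt': 2.5}
```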

However, FCoE isn’t a simple replacement for traditional Fibre Channel. It requires specific hardware support, including Converged Network Adapters (CNAs), which combine an Ethernet NIC with FCoE offload, and DCB-capable switches. Furthermore, the entire path between the server and storage must support FCoE to realize its benefits. While offering potential cost savings and simplified management, FCoE’s complexity and the need for specialized equipment have limited its widespread adoption compared to other technologies like iSCSI and NVMe-oF.

Understanding FCoE’s nuances is vital for network administrators designing and managing modern storage environments, particularly those seeking to consolidate their infrastructure and optimize resource utilization.

NVMe-oF Use Cases

NVMe over Fabrics (NVMe-oF) is rapidly expanding its application scope, driven by the demand for high-performance storage. One key use case is in all-flash arrays, where NVMe-oF unlocks the full potential of flash memory by eliminating bottlenecks associated with traditional protocols. This results in significantly lower latency and higher throughput, crucial for demanding workloads.

Another prominent application is in disaggregated storage architectures. NVMe-oF allows storage resources to be pooled and dynamically allocated to servers as needed, improving resource utilization and flexibility. This is particularly beneficial in cloud environments and data centers with fluctuating workloads.

High-Performance Computing (HPC) also benefits greatly from NVMe-oF. Applications requiring rapid data access, such as scientific simulations and data analytics, can leverage NVMe-oF to accelerate processing times. Marvell’s advancements in FC-NVMe further enhance these capabilities.

Furthermore, NVMe-oF is finding traction in virtualization environments, providing consistent and predictable performance for virtual machines. By delivering low-latency access to storage, NVMe-oF ensures a smooth and responsive user experience. As NVMe technology matures and becomes more affordable, its use cases will continue to proliferate across diverse industries.

Future Trends in Fibre Channel

Fibre Channel’s future is inextricably linked with NVMe-oF, as evidenced by Marvell’s increased investment in this area. We can anticipate continued optimization of FC-NVMe to deliver even greater performance and efficiency, solidifying its position in high-performance storage solutions.

A key trend is the convergence of Fibre Channel and Ethernet technologies. While FCoE faced challenges, advancements in networking infrastructure and protocol enhancements may lead to renewed interest in combining the benefits of both worlds. This could simplify network management and reduce costs.

Artificial Intelligence (AI) and Machine Learning (ML) workloads are driving demand for faster storage access. Fibre Channel, with its low latency and high bandwidth, is well-positioned to support these applications. Expect to see further innovations tailored to the specific needs of AI/ML environments.

Furthermore, increased focus on storage virtualization and disaggregated infrastructure will fuel the adoption of NVMe-oF over Fibre Channel. The ability to dynamically allocate storage resources and scale capacity on demand will become increasingly important. Ultimately, Fibre Channel’s evolution will center around enhancing performance, simplifying management, and adapting to the changing demands of modern data centers.